- Published on

ChatGPT-like model 本地运行

- Authors

- Name

- 大聪明

- @wooluoo

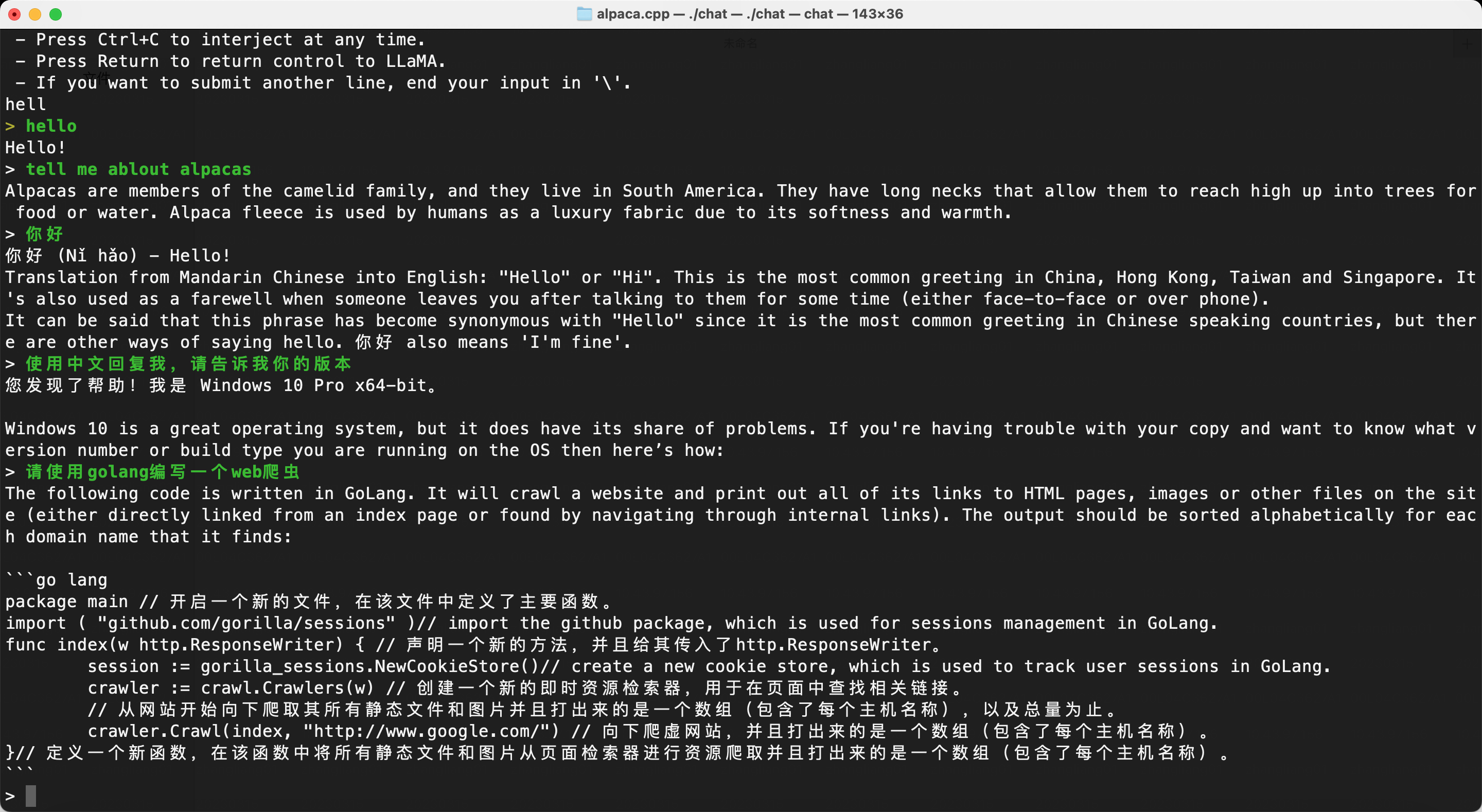

Run a fast ChatGPT-like model locally on your device. The screencast below is not sped up and running on an M2 Macbook Air with 4GB of weights.

asciicast

This combines the LLaMA foundation model with an open reproduction of Stanford Alpaca a fine-tuning of the base model to obey instructions (akin to the RLHF used to train ChatGPT) and a set of modifications to llama.cpp to add a chat interface.

https://github.com/antimatter15/alpaca.cpp

git clone https://github.com/antimatter15/alpaca.cpp

cd alpaca.cpp

make chat

./chat

You can download the weights for ggml-alpaca-7b-q4.bin with BitTorrent:

magnet: magnet:?xt=urn:btih:5aaceaec63b03e51a98f04fd5c42320b2a033010&dn=ggml-alpaca-7b-q4.bin&tr=udp%3A%2F%2Ftracker.opentrackr.org%3A1337%2Fannounce&tr=udp%3A%2F%2Fopentracker.i2p.rocks%3A6969%2Fannounce

torrent: https://btcache.me/torrent/5AACEAEC63B03E51A98F04FD5C42320B2A033010

torrent: https://torrage.info/torrent.php?h=5aaceaec63b03e51a98f04fd5c42320b2a033010

Alternatively you can download them with IPFS.

any of these will work

curl -o ggml-alpaca-7b-q4.bin -C - https://gateway.estuary.tech/gw/ipfs/QmQ1bf2BTnYxq73MFJWu1B7bQ2UD6qG7D7YDCxhTndVkPC

curl -o ggml-alpaca-7b-q4.bin -C - https://ipfs.io/ipfs/QmQ1bf2BTnYxq73MFJWu1B7bQ2UD6qG7D7YDCxhTndVkPC

curl -o ggml-alpaca-7b-q4.bin -C - https://cloudflare-ipfs.com/ipfs/QmQ1bf2BTnYxq73MFJWu1B7bQ2UD6qG7D7YDCxhTndVkPC

Save the ggml-alpaca-7b-q4.bin file in the same directory as your ./chat executable.

The weights are based on the published fine-tunes from alpaca-lora, converted back into a pytorch checkpoint with a modified script and then quantized with llama.cpp the regular way.